Open Call winner Tansy Xiao talks about her artistic practice and the WebSoundArt commission.

Tansy Xiao is an interdisciplinary artist based in New York. She builds interactive installations, works with new media, and creates sound and video art. For the WebSoundArt call, she created a web-based version of *Here’s the information we collect*, a piece that explores the dubious content of privacy policies. Before you continue reading, I suggest you check it out. Here is the link to the work.

Because the interdisciplinary field has such a broad range, I asked her how she sees her practice:

Tansy Xiao: I'm what people call an interdisciplinary artist. I feel like that's a very broad category these days, and it's for people who kind of wish to transcend different disciplines. The way art education and the entire academic system are set up is that they separate professions and skills. Probably even in the most liberal schools, it's difficult not to have a major. So that's how we were trained as artists. But artists are creative, and we get bored doing one thing, so we teach ourselves to do other things. So I do a little bit of extended reality, natural language processing, and coding. I'm not the best coder in the world. I taught myself. I also work with performers a lot. In this particular project, I worked with Ekmeles vocal ensemble. I'm not a performer myself, nor do I play any instruments. Instead, I create instructions for others to improvise upon.

I actually started as a curator, but that was less of a choice and more where my first jobs led me. I found it incredibly fascinating to assemble things that are very distant from each other. And then I realized that I could do that in my own work. I guess that experience, although it is kind of irrelevant to my own practice, in a way forms my way of working, of connecting seemingly irrelevant elements.

JDT: What inspires you as an artist?

TX: I’m particularly interested in language, especially the absurdity of it and the power that's embedded in it. Language could be used as a tool by the ruling class or by any person in power. I have also lived in a lot of places and I travelled a lot, so I guess there's always a sense of non-belonging, but at the same time I don't pursue belonging. I choose not to be restricted by the value system of one discipline or one region. I think that's very important to me, whether I curate other people's work or create my own. I think it's actually dangerous for artists to live in one place for too long. If possible, I encourage everyone to sojourn in different places and change their locations.

JDT: For the WebSoundArt call you’ve worked on *Here’s the information we collect*. What is the work about?

TX: *Here’s the information we collect* is a speech recognition piece that responds to the viewer reading the privacy policy from a specific tech company. We never really pay attention to the privacy policies when we click “Yes, I agree.” Because without that, we can't even use a product. So we're kind of all forced to click “Agree.” Very few people actually go through the entire privacy policy. As I was reading through it, I noticed a few very alarming things in their language, like “search warrant” and “law enforcement.” Some people have a vague idea that the government can occasionally step in when it comes to communication online, when they have a license or something, but actually, we ourselves agreed on that. Involuntarily, but we did.

There's a particular case in the United States. In some of the states, abortion is still not legal. A mother and a daughter were communicating on Facebook Messenger about an abortion, and the police eventually stepped in and arrested them. Yeah, that's a true story. Something like that could happen, and I just feel like, as users, we don't really have a way to protect ourselves from the higher powers in society. So that's why I created this piece.

Domestic Language

JDT: What is the system that you are using in your work?

TX: I'm using a speech recognition library called annyang.js. But actually, the p5.speech.js library would equally work. There are a lot of libraries that basically perform exactly the same task. The online JavaScript speech recognition library actually uploads your speech online. So it happens in the cloud.

*Here’s the information we collect* in a way forces the audience to read the privacy policy, because that's the only way that you can interact with the project. As you read through it, the vocal ensemble is going to say, scream, or respond to the language that the key words are producing. Sometimes it's just deconstructing the word itself, the English language, and sometimes it's kind of responding to it. For example, there's a key word called “communication,” but the singer was actually singing “manipulation.” That is media theory-related: how the media communicates with the masses and how what we can see, read, or hear is actually controlled by certain powers. We're all predetermined by the authorities, and we think we have freedom of speech, but it's actually more controlled and manipulated than we realize.
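The keyword mechanism described here can be sketched roughly as follows. This is an editorial illustration, not the artist's code: the table, the clip file names, and the `matchKeywords` helper are all hypothetical, and only the pairing of “communication” with a sung “manipulation” comes from the interview. It assumes the speech recognition library hands over each recognized phrase as plain text.

```typescript
// Hypothetical keyword table. Only "communication" -> "manipulation" is taken
// from the interview; the other entries and all file names are made up.
interface KeywordResponse {
  keyword: string; // word or phrase to listen for in the spoken policy text
  clip: string;    // name of the pre-recorded vocal response to play
}

const responses: KeywordResponse[] = [
  { keyword: "communication", clip: "manipulation.mp3" },
  { keyword: "search warrant", clip: "search-warrant.mp3" },
  { keyword: "law enforcement", clip: "law-enforcement.mp3" },
];

// Returns the clips whose keyword occurs in the recognized phrase,
// in table order, ignoring letter case.
function matchKeywords(transcript: string): string[] {
  const normalized = transcript.toLowerCase();
  const clips: string[] = [];
  for (let i = 0; i < responses.length; i++) {
    if (normalized.indexOf(responses[i].keyword) !== -1) {
      clips.push(responses[i].clip);
    }
  }
  return clips;
}

// Example: a phrase as a recognizer might report it.
console.log(matchKeywords("We collect your Communication history"));
// → [ 'manipulation.mp3' ]
```

In a browser, the recognizer's result callback would pass each phrase to a function like this and play whatever clips come back. Keeping the matching separate from audio playback makes the keyword table easy to adjust without touching the recognition code.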

One thing to note is that all my projects are open source, even if sometimes I don't post them online. I don't claim the copyright to any of my work because I owe it to the internet and I want to give back. I've noticed that the so-called art world is actually more conservative in that regard compared to the tech industry because, in tech, you share maybe 90% of your code. Maybe the 10% is more valuable to certain corporations, but anything below that, people are very happy to teach newcomers, to educate people, and share their code for more people to be able to do it. Unfortunately, I feel that this generosity is something the traditional art world often lacks.

JDT: I also saw that the piece exists as an offline project?

TX: When I began researching how to do speech recognition, my initial thought was actually using JavaScript. The only reason that I did a physical installation version with Max/MSP was because I was doing a residency at Harvestworks, where they would assign you a free engineer to work with you, and I thought, “Okay, I’m going to do something that I don’t know how to do myself.” So Matthew Ostrowski, a seasoned Max/MSP engineer, helped me create this three-channel Max/MSP version that can be offline. I think that is something valuable to have because not all the exhibition venues have internet access.

The commission from WebSoundArt gave me the opportunity to make my initial plan come true. More people can share it and experience it online. It's more lightweight, it doesn't require particular software to be installed, and it doesn't require the computer to be very powerful, which are all great qualities to have for a work to be more portable and able to tour around the world. That's very important to me.

JDT: You also had the coaches help you and support you. How was this experience?

TX: It was great to have external feedback. Some of them helped me with finalizing the project, and there were a few minor glitches that I had in the end. And some of them helped me with the concept, which was great. More than one of them actually suggested putting the instructions inside of the project, since this is a web project. I'm used to exhibiting in a gallery setting where things are on the wall. So the statement and the instructions are totally separate from the project. This time I had to actually put the instructions in the project, which was a very new experience for me. I think it's definitely helpful to consider different viewer accessibility, including the download speed, and that different people in different areas have different internet speeds. That's something to consider. Overall, it was a learning curve, and I appreciate that a lot.

JDT: Was this your first work of web-based sound art, or do you have any other works that can be considered in this category?

TX: I think the definition of sound is easy for everyone to understand. But the definition of web is the part that I’m not sure about. I had another project that I also worked with a vocalist on, called *Control*. It’s a gesture-conducted video choir that uses ml5 PoseNet. I had selected stock charts from 2020–2022, which became the graphic notation for the singer to sing. The audience would basically pose for the camera to trigger different singing parts. The funny part about it is that because it's a web camera-based project, it isn’t always accurate, and that's kind of the idea. The piece is called *Control*, but it's really about the lack of control in this economy. During that time, a lot of new investors started jumping into the stock market, hoping to have a little bit of control over their finances. A lot of people earned money, and then at the beginning of the next year, they lost everything, but they still had to pay for the tax on the money that they earned in the previous year. It’s kind of a bitter laugh.

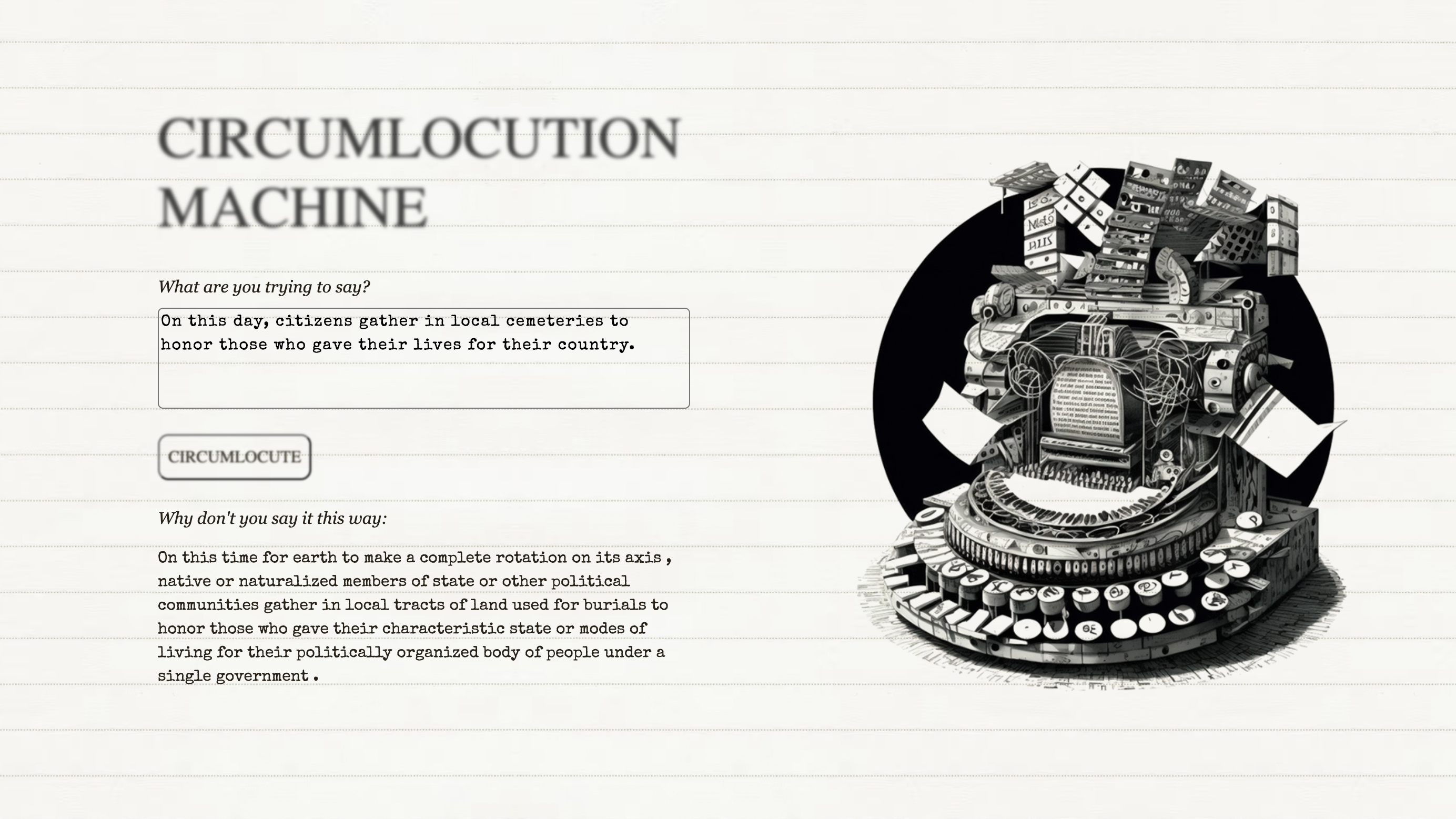

Circumlocution Machine

JDT: How about your Circumlocution Machine?

TX: It is less sound-related and more spoken language. That particular JavaScript library, meSpeak.js, is the only one I could find online that allows overlapping voices. It is not the smoothest one. Please let me know if anybody reading this knows anything better to use! It's not very natural. It's more like a robotic voice, but it allows overlapping. I think that project is also about language and about how people in power sometimes don't really say what they mean and cover it with all different kinds of things. They use certain terms that we're so used to that we don't think about what they actually mean.

A particular example would be the first screenshot of the single-user version that I put online. I made this totally for fun, but then when I typed in this sentence, I realized that I touched on something serious. I found a sentence just from some online news report that says, “On this day, citizens gather in local cemeteries to honor those who gave their lives for their country.” And then the sentence that the program uttered back was, “On this time for earth to make a complete rotation on its axis, native or naturalized members of state or other political communities gather in local tracts of land used for burials to honor those who gave their characteristic state or modes of living for their politically organized body of people under a single government.” So that really makes people question words that we're taking for granted, like, What is a citizen? What is a life, and what does it mean to give your life to a country? What does a country mean?
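The interview does not say how the Circumlocution Machine is implemented. Judging only from the example sentence above, one plausible reading is that certain words are swapped for dictionary-style definitions. The sketch below illustrates that idea under this assumption; the `definitions` table and the `circumlocute` function are hypothetical names, and the five definitions are copied from the quoted output rather than from any real dictionary API.

```typescript
// Hypothetical lookup table. The definitions are lifted from the example
// sentence quoted in the interview, not fetched from a dictionary service.
const definitions: Record<string, string> = {
  day: "time for earth to make a complete rotation on its axis",
  citizens: "native or naturalized members of state or other political communities",
  cemeteries: "tracts of land used for burials",
  lives: "characteristic state or modes of living",
  country: "politically organized body of people under a single government",
};

// Replaces every word that has an entry in the table with its definition,
// leaving all other words, spaces, and punctuation untouched.
function circumlocute(sentence: string): string {
  return sentence.replace(/[A-Za-z]+/g, (word) => {
    const key = word.toLowerCase();
    return Object.prototype.hasOwnProperty.call(definitions, key)
      ? definitions[key]
      : word;
  });
}

console.log(
  circumlocute(
    "On this day, citizens gather in local cemeteries to honor those who gave their lives for their country."
  )
);
```

With this table, the news sentence comes back as the long sentence quoted in the interview. A fuller version would need a real dictionary source and some way to decide which words to expand, neither of which is described in the source.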

JDT: And what does the future bring? Do you have any other web-based projects or something completely different?

TX: Actually, I am creating something non-web and non-sound. I’m in Buffalo doing a residency at the Coalesce BioArt Lab. I'm going to cultivate slime mold, yeast, and other microorganisms, and have live data from those guys streamed into the Unreal Engine, which is virtual reality, and then also have dancers wearing wearable sensors. There's also going to be an instrumentalist. We’re going to put an EEG that detects their brainwave, so as they play, the brainwave is also going to be streamed into VR.

The project is called LUCA (Last Universal Common Ancestor), which is a hypothetical single-celled organism from which all life on earth descended, a theoretical thing that hasn’t been confirmed by scientists. I'm intrigued by the idea of collective agency. We're actually a whole on this earth instead of separate units. All the current technology we have is usually very anthropocentric. It’s designed for a human body. We're so used to this human-centric way of thinking, and that's how we might neglect that there are other living creatures on earth, and it is not what we call an eco-friendly way of living. It's more thinking from a non-anthropocentric perspective. I'm still discovering this whole thing myself.

Maybe in the future, I'm going to develop something web-related for the same project because, with websoundkit and OSC data, you can technically have a chat room connected to virtual reality as well. It is possible in the future to use both the brainwave and typed-in language from a chat room to be streamed into virtual reality at the same time as a performance happens. So if I have access to powerful enough hardware, theoretically, I can have the audience members do live language input, have it analyzed, and affect the environment in virtual reality as well as the physical space (through OSC on grandMA or other lighting control interfaces) as the performance goes.

I think in the future I'm interested in using APIs and collaborating with scientists to have live data from planets or microorganisms communicate with humans in a way or have a shared experience with those things that are bigger than us.

JDT: Looking forward to seeing where that will lead you. Thanks, Tansy!

Some resources

- Tansy Xiao’s Website

- Here’s the information we collect

- Circumlocution Machine

- Control

- LUCA

- annyang.js

- meSpeak.js

- ml5.js PoseNet
