We’ve covered a number of ways to work with audio on the web, mainly using JavaScript libraries that make the Web Audio API a little easier to work with. But what if you’d like a system similar to Max/MSP, with higher-level tools, while still working in code? Perhaps you’re a live coder, or someone looking to use NodeJS to glue a lot of different web-based ideas together. In this article, we’ll explore some browser-based creative coding platforms enabled by WebAssembly.

I briefly touched on WebAssembly (WASM for short) in my tutorial on Max and the Browser, but let’s refresh. As opposed to JavaScript, which is a fairly loose, dynamically typed language that trades raw performance for faster development time and deep browser integration, WebAssembly is a “low-level assembly-like language with a compact binary format that runs with near-native performance.” As with machine code, you’re not meant to write WebAssembly directly; instead, it’s a target that more performant languages like C, C++, or Rust compile down to.

Delving into compiling C++ to WebAssembly is outside the scope of this tutorial, as we’d have to tackle not only writing C++, compiling it, and incorporating our code in a webpage, but also the higher-level DSP math involved. What I can do, however, is introduce a few creative coding systems that have a WASM binary we can leverage in our projects. This means you can get the performance boost of WASM without Max/RNBO’s $400 price tag!

Some of these systems/languages/platforms have a web-based IDE (Integrated Development Environment). An IDE is a step above a code editor in that it typically includes helpful debugging tools relevant to the intent of the language in question, or at least points out syntax errors. This is often a good way of exploring the language features to see if they suit your workflow, and also a strong hint that the language can likely be separated from its IDE for use in a standalone web project.

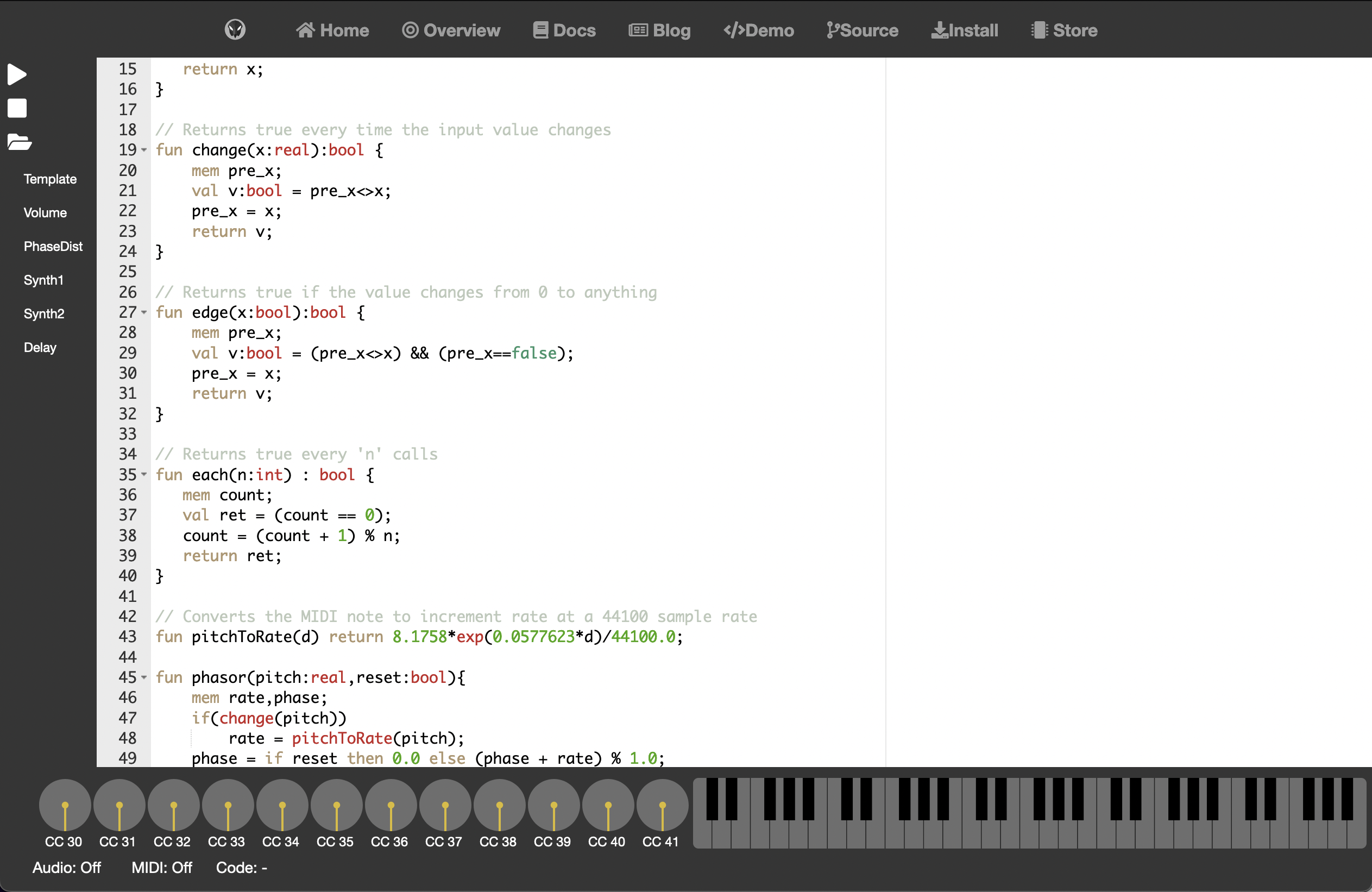

Faust

Rather than being a self-contained audio application like Max/MSP or SuperCollider, Faust is an incredibly extensible language that can compile down to a host of different languages and targets - C, C++, WASM, Android/iOS apps, even SVG block diagrams of connected audio nodes.

Faust also has a plethora of tools to experiment with the language, including a fully-featured online IDE that includes a spectroscope, range controls, and a block diagram, a more stripped-down online editor, and a node-based playground for instruments made with Faust. If you’d like to learn Faust, I recommend trying some examples in the IDE first. Languages purpose-built for audio tend to have a number of terse aliases for their most common functions, which can make reading them a bit challenging, but writing them a breeze. Here’s an example from their documentation (using their web-embeddable editor!):

Here, we’ve created two horizontal sliders. One of them is a volume control with a range from -48 dB to 0 dB and a starting value of -24 dB, which is converted to linear values and then smoothed (ba is short for basics, and si.smoo is short for signals.smoo, a control-rate smoothing function), while the other sets the frequency from 20 Hz to 8 kHz.
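
To make the volume chain a little more concrete, here is a rough JavaScript sketch of a dB-to-linear conversion followed by a one-pole smoother. The names `dbToLinear` and `makeSmoother`, and the smoothing coefficient, are illustrative assumptions; this is not Faust’s own implementation, only the same general idea.

```javascript
// Convert decibels to a linear gain factor: 0 dB -> 1, -20 dB -> 0.1.
function dbToLinear(db) {
  return Math.pow(10, db / 20);
}

// Hypothetical one-pole smoother: each call moves the stored value
// a fraction of the way toward the target, avoiding sudden jumps.
function makeSmoother(coefficient = 0.999, initial = 0) {
  let state = initial;
  return function smooth(target) {
    state = coefficient * state + (1 - coefficient) * target;
    return state;
  };
}

const smooth = makeSmoother(0.9);  // small coefficient so the demo settles fast
const target = dbToLinear(-24);    // the slider's starting value
let gain = 0;
for (let i = 0; i < 200; i++) {
  gain = smooth(target);           // gain glides from 0 toward the target
}
console.log(target.toFixed(4), gain.toFixed(4));
```

A coefficient closer to 1 gives a slower, smoother glide; a smaller one reacts faster but lets more zipper noise through.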

I’ll break down the final line:

- Whatever is assigned to process is the final output of your Faust code. vgroup has to do with Faust’s auto-generated user interface; it groups its child elements vertically. Here, it names a new group “Oscillator,” which contains both the sine oscillator and the gain multiplier.

- Without the final bit of hieroglyphics (<: _,_), this would output in mono. The <: is a split operator that splits the mono output of our oscillator to the first two available outputs of our main out.

- The underscores mean “go to this effect’s implicit input/output,” which here means “go to the final output.” Having two of them means we’re now in stereo.

We could replace one of those underscores with another effect - say, dm.cubicnl_demo,_, which will add a distortion effect to your left ear only.
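
As a loose analogy in JavaScript, the split can be pictured as copying one sample into two parallel branches, each with its own optional processing. Both function names are hypothetical, and `softClip` is only a stand-in nonlinearity, not the algorithm behind dm.cubicnl_demo.

```javascript
// Stand-in soft clipper (hypothetical; not Faust's cubic nonlinearity demo).
function softClip(x) {
  const clamped = Math.max(-1, Math.min(1, x));
  return clamped - (clamped ** 3) / 3;
}

// Mimic `<: left, right`: feed one mono sample into two parallel branches.
// A branch that does nothing plays the role of the underscore.
function splitToStereo(sample, left = (x) => x, right = (x) => x) {
  return [left(sample), right(sample)];
}

const plain = splitToStereo(0.5);                   // same signal in both ears
const distortedLeft = splitToStereo(0.5, softClip); // effect on the left only
console.log(plain, distortedLeft);
```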

You can choose your own adventure as far as how you want to deploy your Faust code to the web, as well. There’s a faust-web-component package, which can embed your examples as editable code, or embed only the interactive elements (sliders, dials, gate buttons) without the editor. I used faust-web-component above to embed the oscillator example.

Alternatively, to use only the audio processing and design the rest of the page yourself, there’s a faust-wasm package for use with NodeJS. If you’ve been following some of our previous tutorials and have installed Node, the faust-wasm package is fairly straightforward. Here are the basic instructions, copied from their GitHub with a bit more explanation:

    # Clone project, enter folder
    git clone https://github.com/grame-cncm/faustwasm.git
    cd faustwasm

    # Install libraries & build project:
    npm install
    npm run build

    # Generate files from mono.dsp Faust file
    node scripts/faust2wasm.js test/mono.dsp test/out

While the package contains a library you can use to interface with the Faust WASM binary directly in JavaScript, the above faust2wasm.js will take a Faust file and generate all the files required to make a basic version of the project in question. This includes an HTML file, a separate JS file that handles loading the WASM file, and the WASM file itself. The HTML file it generates has only a single button, which starts/suspends the Web Audio API’s audio context.
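
For a sense of what such a button does, here is a minimal sketch of toggling an audio context using the standard Web Audio API `state`, `resume()`, and `suspend()` members. The `toggleAudio` helper and the button lookup are assumptions for illustration; this is not the code that faust2wasm.js generates.

```javascript
// Toggle an AudioContext between running and suspended.
// Note: a context whose state is "closed" cannot be resumed.
async function toggleAudio(context) {
  if (context.state === "running") {
    await context.suspend();
  } else {
    await context.resume();
  }
  return context.state;
}

// Browser-only wiring, skipped when run outside a page (for example in Node).
if (typeof window !== "undefined" && typeof document !== "undefined") {
  const audioContext = new AudioContext();
  const button = document.querySelector("button"); // assumes a single button
  if (button) {
    button.addEventListener("click", () => toggleAudio(audioContext));
  }
}
```

Browsers generally require a user gesture before audio can start, which is why the toggle sits behind a click.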

Once the files are generated in the test/out folder, you’ll want to preview them in a localhost server. You can jump back to our first/second tutorials for some options on running a localhost server.

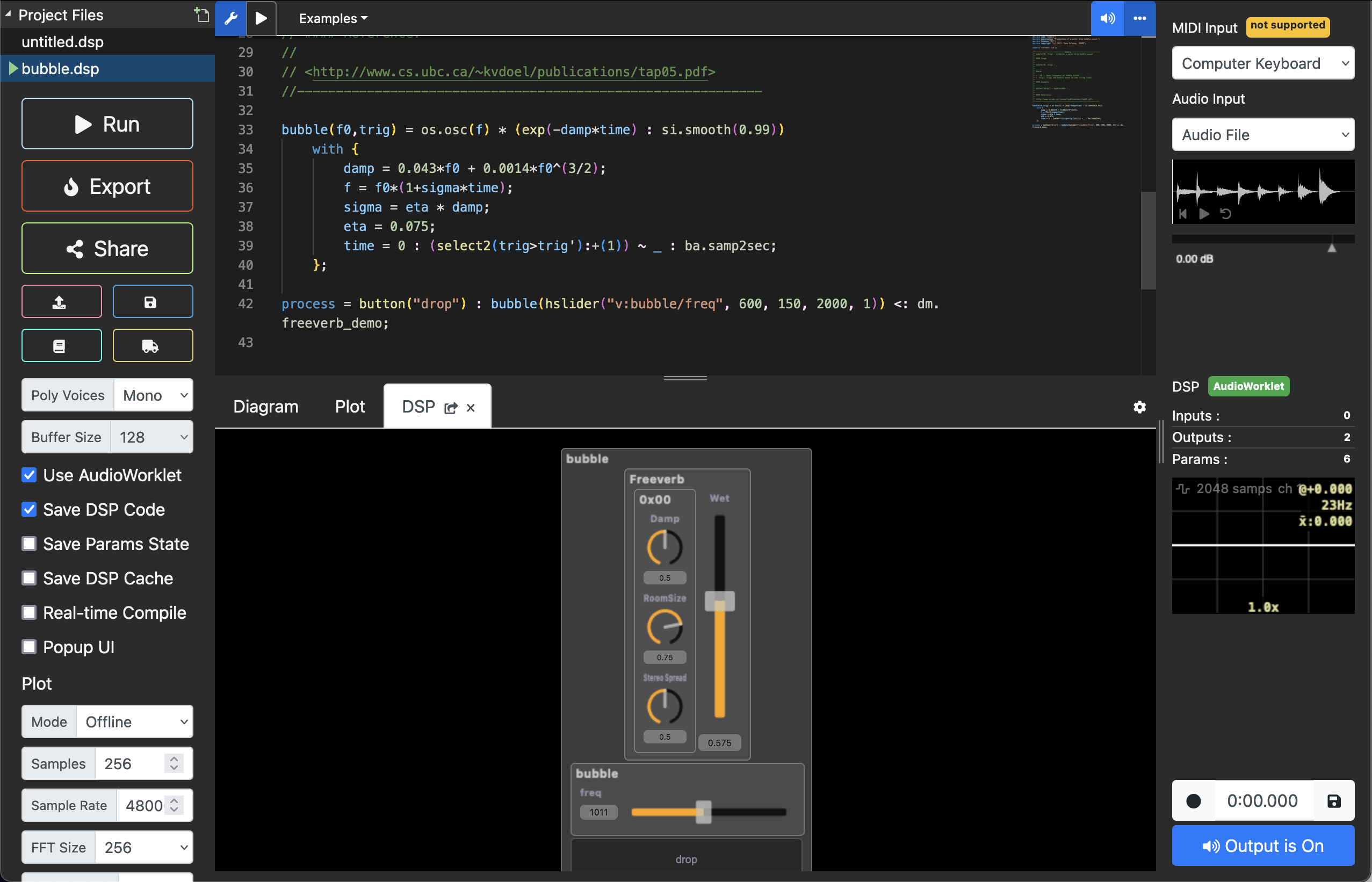

Csound

Faust has been around since the early 2000s, but if you really want the OG imperative text-based sound coding language/platform, Csound has been around since 1985! As opposed to Faust, where every aspect of your sound can live in the same file, Csound is split into an “instruments” section and a “score” section. These aren’t hard and fast rules, as Csound enables some wildly dynamic algorithmic patterns both in timbre and sonic organization.

But to keep it simple, here’s an example from their documentation of a sawtooth oscillator playing a short score of three-second notes, first in sequence and then simultaneously:

    <CsoundSynthesizer>
    <CsOptions>
    -o dac // real-time output
    </CsOptions>
    <CsInstruments>
    sr = 44100 // sample rate
    0dbfs = 1 // maximum amplitude (0 dB) is 1
    nchnls = 2 // number of channels is 2 (stereo)
    ksmps = 64 // number of samples in one control cycle (audio vector)

    instr 1
      iAmp = p4 // get p4 from the score line as amplitude
      iFreq = p5 // get p5 from the score line as frequency
      aOut = vco2:a(iAmp,iFreq) // sawtooth VCO
      outall(aOut) // output to all channels
    endin
    </CsInstruments>
    <CsScore>
    // call instrument 1 in sequence
    i 1 0 3 0.1 440
    i 1 3 3 0.1 550
    // call instrument 1 simultaneously
    i 1 7 3 0.05 550
    i 1 7 3 0.05 660
    </CsScore>
    </CsoundSynthesizer>

Here, we have one instrument (a voltage-controlled oscillator) in our instruments section. In the score, “i 1” means “play the first instrument,” the next two numbers indicate starting time and duration, and the final two are values to pipe into iAmp and iFreq. Csound doesn’t have an embeddable mini-editor like Faust, but you can try this example in their online IDE here.
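
To spell out how a score line maps onto those fields, here is a small JavaScript sketch that splits one line into its parts. `parseScoreLine` is a hypothetical helper written for this article; it only handles the simple space-separated form used above, not the full Csound score syntax.

```javascript
// Split a simple "i" score statement into named fields.
// p1 = instrument, p2 = start time, p3 = duration, p4 onward = extra values.
function parseScoreLine(line) {
  const [statement, ...fields] = line.trim().split(/\s+/);
  if (statement !== "i") {
    throw new Error(`Not an instrument statement: ${line}`);
  }
  const [instrument, start, duration, ...params] = fields.map(Number);
  return { instrument, start, duration, params };
}

const note = parseScoreLine("i 1 0 3 0.1 440");
// instrument 1, starting at 0 s, lasting 3 s, with p4 = 0.1 and p5 = 440
console.log(note);
```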

Embedding Csound in a webpage via WASM is a bit less immediate than Faust, as there is no simple compiler script that assembles all the necessary materials. If you’re familiar with Node, these instructions are fairly easy to follow, as the only non-Csound parts involve installing Vite and adding the csound-browser library to your project.

As Csound’s default behavior is to play a score file, you may be wondering if it’s possible to make interactive controls, rather than having the Csound audio play back literally. Steven Yi’s “Learn Synthesis” page (view here, code here) has a good example of doing exactly this.
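
One common route to interactivity is a named control channel: the page writes a value, and the instrument reads it (for example with the chnget opcode). The sketch below assumes a `csound` object exposing `setControlChannel(name, value)`, as the browser build does; the `sendControl` helper, the channel name, and the ranges are made up for illustration, and the stand-in object only logs so the snippet runs without the library.

```javascript
// Map a normalized control value (0..1) onto a range and send it
// to a named Csound control channel.
function sendControl(csound, channel, normalized, min, max) {
  const clamped = Math.min(1, Math.max(0, normalized));
  const value = min + clamped * (max - min);
  return csound.setControlChannel(channel, value);
}

// Stand-in object so the sketch runs without the Csound library.
// In a real page this would be the instance created by the browser build.
const loggingCsound = {
  setControlChannel(name, value) {
    console.log(`channel "${name}" set to ${value}`);
  },
};

// Example: a slider at its midpoint controlling a 20-8000 Hz frequency range.
sendControl(loggingCsound, "freq", 0.5, 20, 8000);
```

In a page, the same call would typically sit inside a slider’s input event handler.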

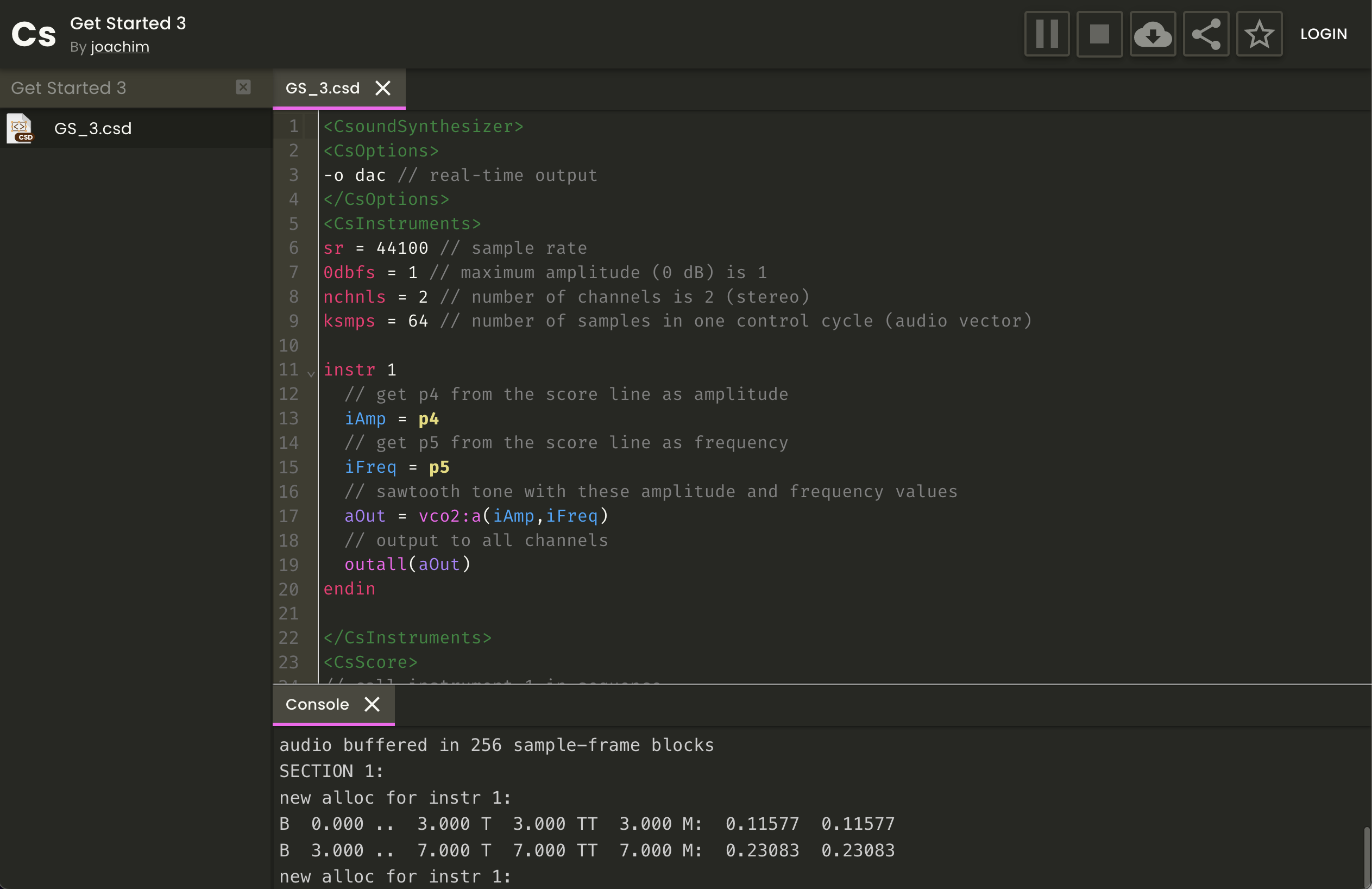

Vult

You may recognize Vult as a company rather than a language if you’ve worked with VCV Rack or similar modular synthesis systems before, as they make both digital and physical eurorack modules. All their modules are made with their open-source language, also called Vult.

Now, I’ll warn you - Vult is great if you’re familiar with DSP, but if you’re not, it’ll look pretty overwhelming. There are no libraries, so if you’d like to, say, create a sine oscillator and filter it, you have to make it yourself. Here’s a selection of the code in the example below, pulling out just what’s used to make a wavetable oscillator (missing several helper functions):

    // Generates a wave table with the given harmonics
    fun wave(phase) @[table(size=128, min=0.0, max=1.0)] {
      val x1 = 1.0 * sin(phase * 1.0 * 6.28318530718);
      val x2 = 0.5 * sin(phase * 2.0 * 6.28318530718);
      val x3 = 0.3 * sin(phase * 3.0 * 6.28318530718);
      val x4 = 0.3 * sin(phase * 4.0 * 6.28318530718);
      val x5 = 0.2 * sin(phase * 5.0 * 6.28318530718);
      val x6 = 0.1 * sin(phase * 6.0 * 6.28318530718);
      return x1+x2+x3+x4+x5+x6;
    }

    // Produces a tuned (aliased) saw wave
    fun phase(cv:real) : real {
      mem rate;
      if(change(cv)) {
        rate = cvToRate(cv);
      }
      mem phase = phase + rate;
      phase = if phase > 1.0 then phase - 1.0 else phase;
      return phase;
    }

    // Converts a cv value (0.0 - 1.0) to a delta value used for oscillators
    fun cvToRate(cv) @[table(size=32, min=0.0, max=1.0)] {
      return pitchToRate(cvToPitch(cv));
    }

    // Returns true if the input value changes
    fun change(x:real):bool {
      mem pre_x;
      val v:bool = pre_x <> x;
      pre_x = x;
      return v;
    }

    // inside process function:
    fun process(input:real) {
      // ...
      val o1 = osc(0.3 + mod1);
      // ...
      return o1;
    }
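
If the mem keyword is unfamiliar, it may help to see the same phase accumulator written with JavaScript closures, where a variable captured by the returned function plays the role of mem. This is only a sketch: `makeChange` and `makePhasor` are hypothetical names, the fixed rate replaces the omitted cvToRate helper, and the zero starting values are an assumption about how Vult initializes mem variables.

```javascript
// Mirrors Vult's `change`: remembers the previous input between calls.
function makeChange() {
  let previous = 0; // assumed starting value for the remembered input
  return function change(x) {
    const changed = previous !== x;
    previous = x;
    return changed;
  };
}

// Mirrors Vult's `phase`: a ramp that wraps around after passing 1.0.
// `cvToRate` is a stand-in for the helper omitted from the excerpt.
function makePhasor(cvToRate) {
  const change = makeChange();
  let rate = 0;
  let phase = 0;
  return function next(cv) {
    if (change(cv)) {
      rate = cvToRate(cv); // only recompute when the control value moves
    }
    phase += rate;
    if (phase > 1.0) phase -= 1.0;
    return phase;
  };
}

const next = makePhasor(() => 0.25); // fixed rate: a quarter cycle per sample
const ramp = [];
for (let i = 0; i < 5; i++) ramp.push(next(0.5));
console.log(ramp); // [ 0.25, 0.5, 0.75, 1, 0.25 ]
```

The rising-then-wrapping ramp is the “saw wave” mentioned in the comment; reading a wavetable at that phase is what turns it into the final oscillator.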

It’s daunting at first, but at the same time it makes DSP algorithms a little more real. I’m not enough of a DSP expert to be able to interpret everything I’m seeing, but Vult makes me feel like I could! Here’s a simpler example from their VCV Rack playground GitHub page, which makes a 4-channel mixer:

    fun process(in1:real, in2:real, in3:real, in4:real) {
      mem param1, param2, param3, param4;
      val out1, out2, out3, out4 = 0.0, 0.0, 0.0, 0.0;
      // all inputs mixed to out1, each scaled by its own gain parameter
      out1 = in1 * param1 + param2 * in2 + param3 * in3 + param4 * in4;
      return out1, out2, out3, out4;
    }
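
For comparison, the same multiply-and-sum idea can be sketched in JavaScript for any number of channels. `mixChannels` is a hypothetical helper, not part of Vult or its playground.

```javascript
// Scale each input sample by its gain and sum the results into one output.
function mixChannels(inputs, gains) {
  if (inputs.length !== gains.length) {
    throw new Error("Each input needs exactly one gain");
  }
  return inputs.reduce((sum, sample, i) => sum + sample * gains[i], 0);
}

// Four input samples with four gain settings, mixed down to one sample.
const mixed = mixChannels([1, 0.5, 0.25, 0], [0.5, 0.5, 1, 1]);
console.log(mixed); // 1
```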

Easy enough, right? Multiply every signal by its gain, and add them all together. Your best bet for getting your feet wet with Vult is going to the examples folder in the GitHub source and then trying them out in the online IDE. Sometimes the documentation is a bit shaky, and there are a few global libraries that don’t seem to work in the IDE. If you’re trying to dig a little deeper into DSP but don’t feel comfortable with C++ or JUCE, Vult might be perfect for you!

The process of running Vult as a WASM build is very similar to Faust’s. A Node library compiles a .vult file and generates a page for you. Here’s the example page provided, and here’s the GitHub page with full instructions.

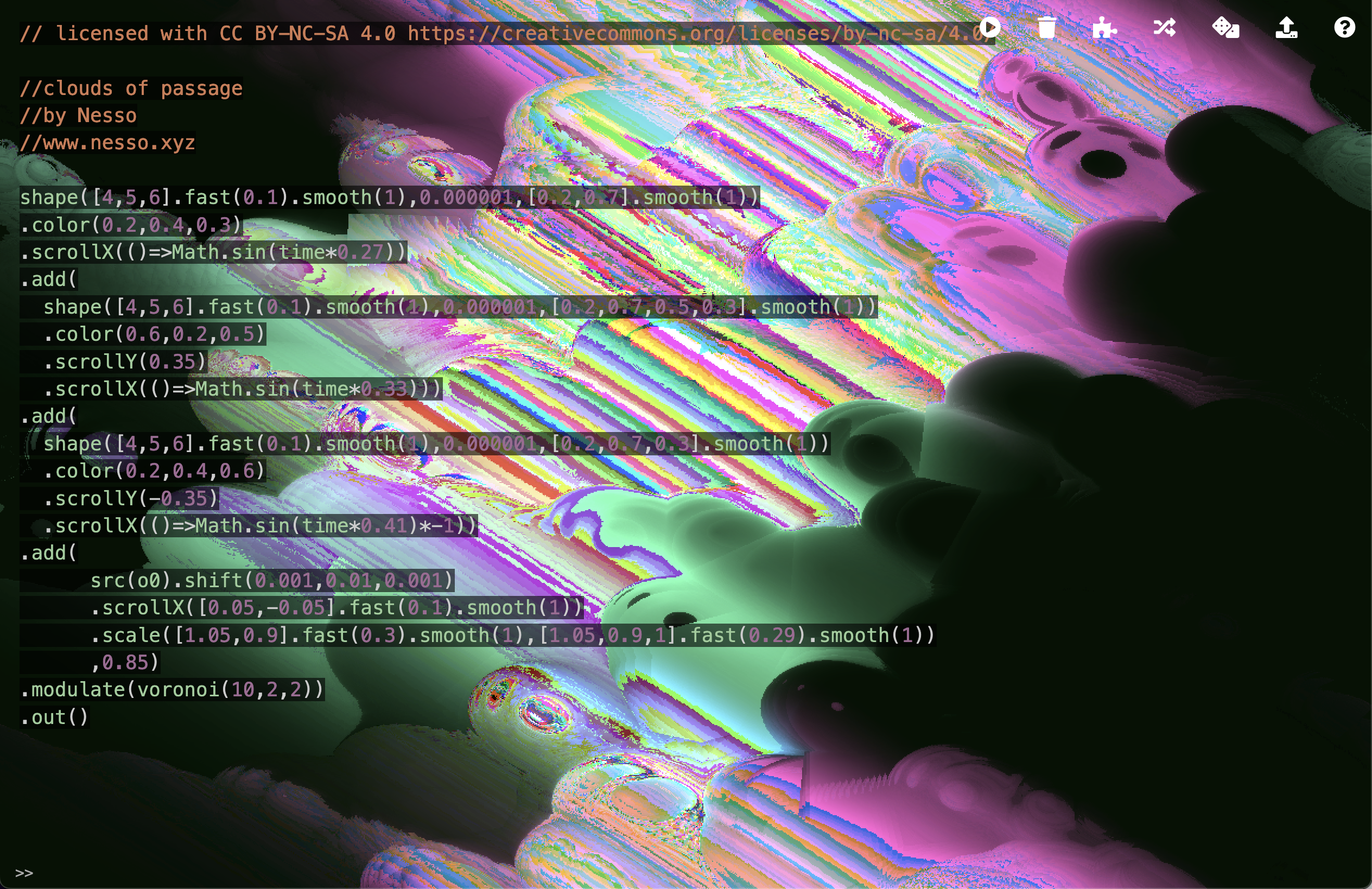

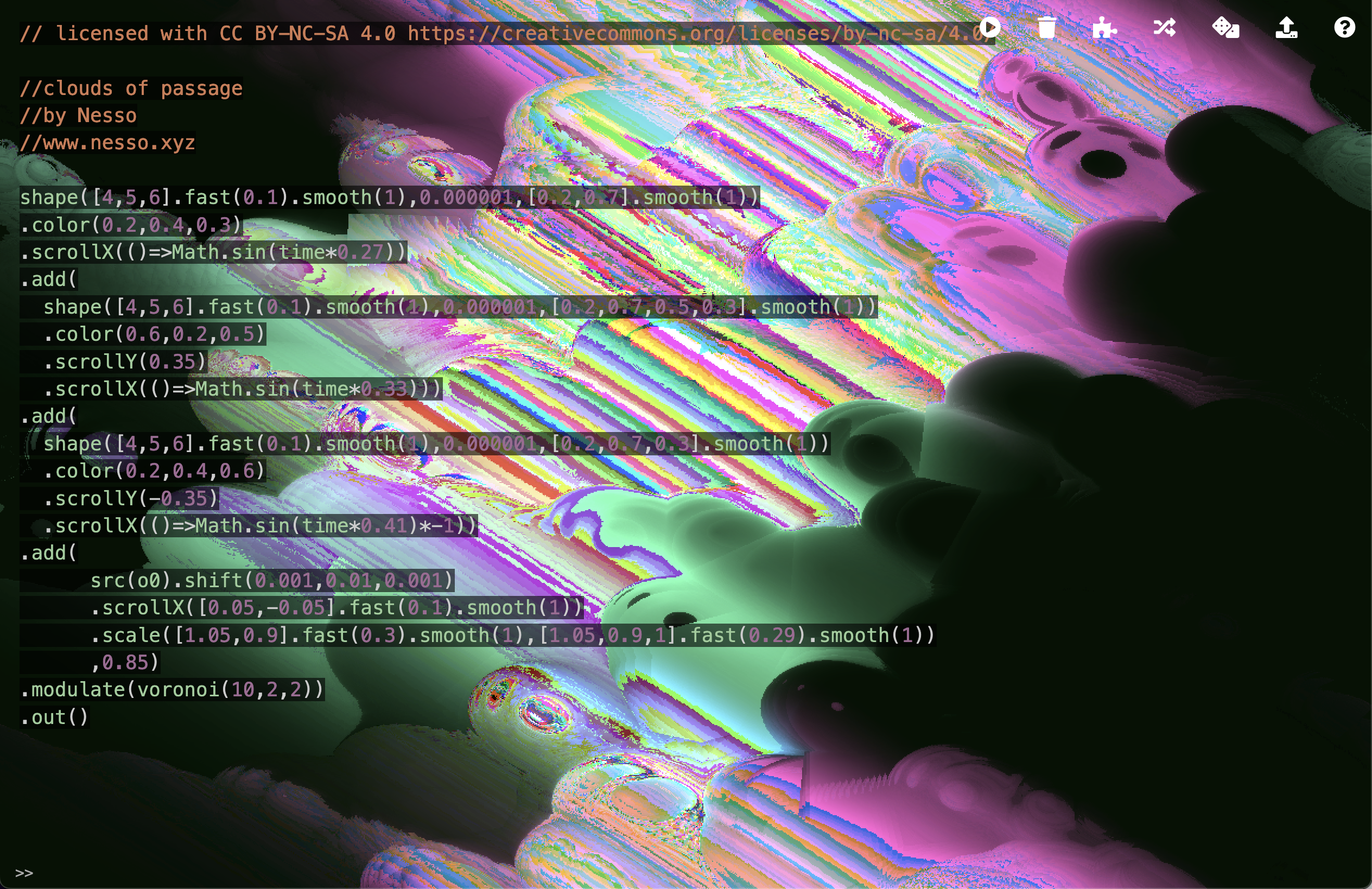

Hydra

This is a bit of a detour, but what if you were interested in not just live coding audio, but also visuals? You should try hydra! If you’re familiar with the basic ideas of modular synthesis, namely controlling oscillators with other oscillators and/or some amount of external input, you’ll find this both familiar and unfamiliar at the same time.

hydra allows for live-coding, and you can code in tandem with someone else on the same network as you. Here’s the second-most basic patch provided in their documentation:

osc(20, 0.1, 0.8).rotate(0.8).out()

Click here to witness a slow, blue-to-orange oscillator pan diagonally across your screen.

I’m cheating a little here, because not only is this not audio, but it’s also not embeddable via WASM! Embedding hydra uses the separate hydra-synth JS library, which has all of the visual processing, and none of the networking. You can view the instructions here.

SuperCollider?

Sadly, SuperCollider has no WASM build! There are threads on the topic, but the only extant build was a fork made by one individual, and isn’t part of larger SC development. There’s also supercollider.js, but it still requires you to have the SuperCollider server running on the computer accessing the webpage.

Conclusions

If you’re having trouble choosing which of these four options to try, here are some thoughts:

- If you want something similar to p5.js, Tone.js, or SuperCollider, Faust is your best bet. It compiles to a number of other targets apart from the web, so your work could be ported to other devices without needing to change your main synthesis code.

- If you’d like a language purpose-built to play a piece, which can be an incredibly dynamic, algorithmic experience, and has incredible potential as far as sonic variety, give Csound a try. If you’d like immediate user interactivity, Csound will take a little more work.

- If you’re into DSP or would like to get into DSP, but want something a little more readable than C++, use Vult. Like Faust, Vult also compiles to a number of other targets, including your own VCV Rack modules, or Teensy boards for making hardware eurorack modules.

- If you’d like to try visual analog synthesis that’s embeddable in the browser, use hydra.

On interactivity: Faust and Vult are the most amenable to external inputs controlling their audio sources, and both of their IDEs also include controls for experimenting with ranged inputs and/or MIDI inputs. Csound’s WASM build includes a csound.setControlChannel function for passing values in from the page, whereas hydra requires re-running the script if changes are made.
